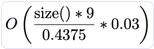

<img src="https://github.com/greg7mdp/parallel-hashmap/blob/master/html/img/phash.png?raw=true" width="120" align="middle">

# The Parallel Hashmap [Travis CI](https://travis-ci.org/greg7mdp/parallel-hashmap) [AppVeyor](https://ci.appveyor.com/project/greg7mdp/parallel-hashmap)

## Overview

This repository aims to provide a set of excellent **hash map** implementations, as well as a **btree** alternative to std::map and std::set, with the following characteristics:

- **Header only**: nothing to build, just copy the `parallel_hashmap` directory to your project and you are good to go.

- **Drop-in replacement** for `std::unordered_map`, `std::unordered_set`, `std::map` and `std::set`

- Compiler with **C++11 support** required, **C++14 and C++17 APIs are provided (such as `try_emplace`)**

- **Very efficient**, significantly faster than your compiler's unordered map/set or Boost's, or than [sparsepp](https://github.com/greg7mdp/sparsepp)

- **Memory friendly**: low memory usage, although a little higher than [sparsepp](https://github.com/greg7mdp/sparsepp)

- Supports **heterogeneous lookup**

- Easy to **forward declare**: just include `phmap_fwd_decl.h` in your header files to forward declare Parallel Hashmap containers [note: this does not work currently for hash maps with pointer keys]

- **Dump/load** feature: when a `flat` hash map stores data that is trivially copyable (`std::is_trivially_copyable`), the table can be dumped to disk and restored as a single array, very efficiently, and without requiring any hash computation. This is typically about 10 times faster than doing element-wise serialization to disk, but it will use 10% to 60% extra disk space. See `examples/serialize.cc`. _(flat hash map/set only)_

- **Tested** on Windows (VS2015, VS2017, VS2019, Intel compiler 18 and 19), Linux (g++ 4.8.4, 5, 6, 7, 8, clang++ 3.9, 4.0, 5.0) and macOS (g++ and clang++). Follow the Travis CI and AppVeyor links above for detailed test status.

- Automatic support for **boost's hash_value()** method for providing the hash function (see `examples/hash_value.h`). Also default hash support for `std::pair` and `std::tuple`.

- **natvis** visualization support in Visual Studio _(hash map/set only)_

@byronhe kindly provided this [Chinese translation](https://byronhe.com/post/2020/11/10/parallel-hashmap-btree-fast-multi-thread-intro/) of the README.md.

## Fast *and* memory friendly

See the [full writeup explaining the design and benefits of the Parallel Hashmap](https://greg7mdp.github.io/parallel-hashmap/).

The hashmaps and btree provided here are built upon those open sourced by Google in the Abseil library. The hashmaps use closed hashing, where values are stored directly into a memory array, avoiding memory indirections. By using parallel SSE2 instructions, these hashmaps are able to look up items by checking 16 slots in parallel, allowing the implementation to remain fast even when the table is filled up to 87.5% capacity.

> **IMPORTANT:** This repository borrows code from the [abseil-cpp](https://github.com/abseil/abseil-cpp) repository, with modifications, and may behave differently from the original. This repository is an independent work, with no guarantees implied or provided by the authors. Please visit [abseil-cpp](https://github.com/abseil/abseil-cpp) for the official Abseil libraries.

## Installation

Copy the `parallel_hashmap` directory to your project. Update your include path. That's all.

If you are using Visual Studio, you probably want to add `phmap.natvis` to your projects. This will allow for a clear display of the hash table contents in the debugger.

> A CMake configuration file (`CMakeLists.txt`) is provided for building the tests and examples. The command for building and running the tests is: `mkdir build && cd build && cmake -DPHMAP_BUILD_TESTS=ON -DPHMAP_BUILD_EXAMPLES=ON .. && cmake --build . && make test`

## Example

```c++
#include <iostream>
#include <string>
#include <parallel_hashmap/phmap.h>

using phmap::flat_hash_map;

int main()
{
    // Create a flat_hash_map with three entries (strings mapping to strings)
    flat_hash_map<std::string, std::string> email =
    {
        { "tom",  "tom@gmail.com"},
        { "jeff", "jk@gmail.com"},
        { "jim",  "jimg@microsoft.com"}
    };

    // Iterate and print keys and values
    for (const auto& n : email)
        std::cout << n.first << "'s email is: " << n.second << "\n";

    // Add a new entry
    email["bill"] = "bg@whatever.com";

    // and print it
    std::cout << "bill's email is: " << email["bill"] << "\n";

    return 0;
}
```

## Various hash maps and their pros and cons

The header `parallel_hashmap/phmap.h` provides the implementation for the following eight hash tables:
- phmap::flat_hash_set
- phmap::flat_hash_map
- phmap::node_hash_set
- phmap::node_hash_map
- phmap::parallel_flat_hash_set
- phmap::parallel_flat_hash_map
- phmap::parallel_node_hash_set
- phmap::parallel_node_hash_map

The header `parallel_hashmap/btree.h` provides the implementation for the following btree-based ordered containers:
- phmap::btree_set
- phmap::btree_map
- phmap::btree_multiset
- phmap::btree_multimap

The btree containers are direct ports from Abseil, and should behave exactly the same as the Abseil ones, modulo small differences (such as supporting std::string_view instead of absl::string_view, and being forward declarable).

When btrees are mutated, values stored within can be moved in memory. This means that pointers or iterators to values stored in btree containers can be invalidated when that btree is modified. This is a significant difference with `std::map` and `std::set`, as the std containers do offer a guarantee of pointer stability. The same is true for the 'flat' hash maps and sets.
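
The pointer-invalidation behavior described above can be illustrated with the standard library alone. The sketch below is an analogy and does not use phmap: `std::vector` stands in for containers that store values inline (btree and `flat` tables) and may relocate them on mutation, while `std::unordered_map` stands in for node-based containers, whose element addresses stay fixed. The helper names `inline_storage_moved` and `node_storage_stable` are hypothetical.

```c++
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <unordered_map>
#include <vector>

// Analogy only: std::vector stores its elements inline, like the btree and
// `flat` containers, so growing it can relocate every element.
// Returns true if the first element changed address after growth.
inline bool inline_storage_moved()
{
    std::vector<int> v{42};
    // Record the address as an integer so the stale pointer is never used.
    const auto before = reinterpret_cast<std::uintptr_t>(v.data());
    const std::size_t old_capacity = v.capacity();

    // Push until the vector has to reallocate its storage.
    while (v.capacity() == old_capacity)
        v.push_back(0);

    const auto after = reinterpret_cast<std::uintptr_t>(v.data());
    return before != after;
}

// std::unordered_map is node-based, like the `node` hash tables: the
// standard guarantees that rehashing does not move existing elements.
// Returns true if the element kept its address across many insertions.
inline bool node_storage_stable()
{
    std::unordered_map<int, int> m{{0, 42}};
    const int* before = &m.at(0);

    for (int i = 1; i <= 10000; ++i)
        m[i] = i;                     // triggers several rehashes

    assert(m.size() == 10001);
    return before == &m.at(0) && *before == 42;
}
```

Both helpers are expected to return `true`. If your code keeps pointers or references to stored values across mutations, this is the situation in which a `node` container is the safer choice.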

The full types with template parameters can be found in the [parallel_hashmap/phmap_fwd_decl.h](https://raw.githubusercontent.com/greg7mdp/parallel-hashmap/master/parallel_hashmap/phmap_fwd_decl.h) header, which is useful for forward declaring the Parallel Hashmaps when necessary.

**Key decision points for hash containers:**

- The `flat` hash maps will move the keys and values in memory. So if you keep a pointer to something inside a `flat` hash map, this pointer may become invalid when the map is mutated. The `node` hash maps don't, and should be used instead if this is a problem.

- The `flat` hash maps will use less memory, and usually be faster than the `node` hash maps, so use them if you can. The exception is when the values inserted in the hash map are large (say more than 100 bytes [*needs testing*]) and costly to move.

- The `parallel` hash maps are preferred when you have a few hash maps that will store a very large number of values. The `non-parallel` hash maps are preferred if you have a large number of hash maps, each storing a relatively small number of values.

- The benefits of the `parallel` hash maps are:
  1. reduced peak memory usage (when resizing), and
  2. multithreading support (and inherent internal parallelism)

**Key decision points for btree containers:**

Btree containers are ordered containers, which can be used as alternatives to `std::map` and `std::set`. They store multiple values in each tree node, and are therefore more cache friendly and use significantly less memory.

Btree containers will usually be preferable to the default red-black trees of the STL, except when:
- pointer stability or iterator stability is required
- the value_type is large and expensive to move

When an ordering is not needed, a hash container is typically a better choice than a btree one.

## Changes to Abseil's hashmaps

- The default hash framework is std::hash, not absl::Hash. However, if you prefer the default to be the Abseil hash framework, include the Abseil headers before `phmap.h` and define the preprocessor macro `PHMAP_USE_ABSL_HASH`.

- The `erase(iterator)` and `erase(const_iterator)` both return an iterator to the element following the removed element, as does the std::unordered_map. A non-standard `void _erase(iterator)` is provided in case the return value is not needed.

- No new types, such as `absl::string_view`, are provided. All types with a `std::hash<>` implementation are supported by phmap tables (including `std::string_view` of course if your compiler provides it).

- The Abseil hash tables internally randomize a hash seed, so that the table iteration order is non-deterministic. This can be useful to prevent *Denial Of Service* attacks when a hash table is used for a customer facing web service, but it can make debugging more difficult. The *phmap* hashmaps by default do **not** implement this randomization, but it can be enabled by adding `#define PHMAP_NON_DETERMINISTIC 1` before including the header `phmap.h` (as is done in raw_hash_set_test.cc).

- Unlike the Abseil hash maps, we do an internal mixing of the hash value provided. This prevents serious degradation of the hash table performance when the hash function provided by the user has poor entropy distribution. The cost in performance is minimal, and this helps provide reliable performance even with *imperfect* hash functions.
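
To see why mixing helps, consider a user-provided hash that leaves the low bits constant. The sketch below is a generic illustration, not phmap's actual mixing code: `mix` is a hypothetical multiplicative mixer, `poor_hash` is a deliberately weak hash, and `distinct_buckets` is a hypothetical helper that counts how many of 64 buckets are used by 64 keys.

```c++
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <set>

// A deliberately poor "hash": identity. The keys used below are multiples
// of 1024, so the low 10 bits of every hash value are zero.
inline std::uint64_t poor_hash(std::uint64_t key) { return key; }

// Hypothetical mixer (assumption: NOT phmap's implementation). Multiplying
// by an odd 64-bit constant and keeping the high bits moves the entropy of
// the input into the bits used for bucket selection.
inline std::uint64_t mix(std::uint64_t h)
{
    return (h * 0x9E3779B97F4A7C15ull) >> 58;   // keep the top 6 bits
}

// Count how many of the 64 buckets receive at least one of 64 keys.
inline std::size_t distinct_buckets(bool use_mixing)
{
    std::set<std::uint64_t> used;
    for (std::uint64_t i = 0; i < 64; ++i) {
        const std::uint64_t h = poor_hash(i * 1024);
        // Without mixing, the bucket index is just the low 6 bits.
        used.insert(use_mixing ? mix(h) : (h & 63));
    }
    assert(used.size() <= 64);
    return used.size();
}
```

Without mixing, `distinct_buckets(false)` returns 1 because every key lands in bucket 0. With the mixer applied, the same keys are spread over more than one bucket, which is the degradation the internal mixing is meant to prevent.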

## Memory usage

| type                  | memory usage      | additional *peak* memory usage when resizing  |
|-----------------------|-------------------|-----------------------------------------------|
| flat tables           |                   |                                               |
| node tables           |                   |                                               |
| parallel flat tables  |                   |                                               |
| parallel node tables  |                   |                                               |

- *size()* is the number of values in the container, as returned by the size() method
- *load_factor()* is the ratio: `size() / bucket_count()`. It varies between 0.4375 (just after the resize) and 0.875 (just before the resize). The size of the bucket array doubles at each resize.
- the value 9 comes from `sizeof(void *) + 1`, as the *node* hash maps store one pointer plus one byte of metadata for each entry in the bucket array.
- flat tables store the values (plus one byte of metadata per value) directly into the bucket array, hence the `sizeof(C::value_type) + 1`.
- the additional peak memory usage (when resizing) corresponds to the old bucket array (half the size of the new one, hence the 0.5), which contains the values to be copied to the new bucket array, and which is freed when the values have been copied.
- the *parallel* hashmaps, when created with a template parameter N=4, create 16 submaps. When the hash values are well distributed, and in single threaded mode, only one of these 16 submaps resizes at any given time, hence the factor `0.03`, roughly equal to `0.5 / 16`.

## Iterator invalidation for hash containers

The rules are the same as for `std::unordered_map`, and are valid for all the phmap hash containers:

| Operations                                | Invalidated                |
|-------------------------------------------|----------------------------|
| All read only operations, swap, std::swap | Never                      |
| clear, rehash, reserve, operator=         | Always                     |
| insert, emplace, emplace_hint, operator[] | Only if rehash triggered   |
| erase                                     | Only to the element erased |

## Iterator invalidation for btree containers

Unlike for `std::map` and `std::set`, any mutating operation may invalidate existing iterators to btree containers.

| Operations                                | Invalidated                |
|-------------------------------------------|----------------------------|
| All read only operations, swap, std::swap | Never                      |
| clear, operator=                          | Always                     |
| insert, emplace, emplace_hint, operator[] | Yes                        |
| erase                                     | Yes                        |

## Example 2 - providing a hash function for a user-defined class

In order to use a flat_hash_set or flat_hash_map, a hash function should be provided. This can be done with one of the following methods:

- Provide a hash functor via the HashFcn template parameter

- As with boost, you may add a `hash_value()` friend function in your class.

For example:

```c++
#include <parallel_hashmap/phmap_utils.h> // minimal header providing phmap::HashState()
#include <string>
using std::string;

struct Person
{
    bool operator==(const Person &o) const
    {
        return _first == o._first && _last == o._last && _age == o._age;
    }

    // Found by phmap through argument-dependent lookup, as with boost
    friend size_t hash_value(const Person &p)
    {
        return phmap::HashState().combine(0, p._first, p._last, p._age);
    }

    string _first;
    string _last;
    int    _age;
};
```

- Inject a specialization of `std::hash` for the class into the "std" namespace. We provide a convenient and small header `phmap_utils.h` which makes it easy to add such specializations.

For example:

### file "Person.h"

```c++
#include <parallel_hashmap/phmap_utils.h> // minimal header providing phmap::HashState()
#include <string>
using std::string;

struct Person
{
    bool operator==(const Person &o) const
    {
        return _first == o._first && _last == o._last && _age == o._age;
    }

    string _first;
    string _last;
    int    _age;
};

namespace std
{
    // inject specialization of std::hash for Person into namespace std
    // ----------------------------------------------------------------
    template<> struct hash<Person>
    {
        std::size_t operator()(Person const &p) const
        {
            return phmap::HashState().combine(0, p._first, p._last, p._age);
        }
    };
}
```

The `std::hash` specialization for `Person` combines the hash values of the first name, last name and age, using the convenient `phmap::HashState()` function, and returns the combined hash value.
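
For intuition about what combining does, the sketch below folds several field hashes into one value using the well-known Boost-style `hash_combine` formula. It is an illustration using only the standard library; `phmap::HashState().combine` may be implemented differently, and the names `hash_combine`, `PersonLike`, `hash_person` and `check_equal_people_hash_equal` are hypothetical.

```c++
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>

// Boost-style combiner (assumption: shown for illustration only, not
// necessarily what phmap::HashState().combine does internally).
template <class T>
inline void hash_combine(std::size_t& seed, const T& value)
{
    seed ^= std::hash<T>{}(value) + 0x9e3779b9 + (seed << 6) + (seed >> 2);
}

// Hypothetical stand-in for the Person struct above.
struct PersonLike
{
    std::string first;
    std::string last;
    int         age;
};

// Fold the hash of every field into a single seed, starting from 0.
inline std::size_t hash_person(const PersonLike& p)
{
    std::size_t seed = 0;
    hash_combine(seed, p.first);
    hash_combine(seed, p.last);
    hash_combine(seed, p.age);
    return seed;
}

// Objects that compare equal must produce equal hashes.
inline void check_equal_people_hash_equal()
{
    const PersonLike a{"Jane", "Smith", 32};
    const PersonLike b{"Jane", "Smith", 32};
    assert(hash_person(a) == hash_person(b));
}
```

The important property is that every field taking part in `operator==` also takes part in the hash, so that equal objects always hash to the same value.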

### file "main.cpp"

```c++
#include "Person.h"   // defines Person with std::hash specialization

#include <iostream>
#include <parallel_hashmap/phmap.h>

int main()
{
    // As we have defined a specialization of std::hash() for Person,
    // we can now create flat_hash_set or flat_hash_map of Persons
    // ----------------------------------------------------------------
    phmap::flat_hash_set<Person> persons =
        { { "John", "Mitchell", 35 },
          { "Jane", "Smith",    32 },
          { "Jane", "Smith",    30 },
        };

    for (auto& p: persons)
        std::cout << p._first << ' ' << p._last << " (" << p._age << ")" << '\n';

}
```

## Thread safety

Parallel Hashmap containers follow the thread safety rules of the Standard C++ library. In particular:

- A single phmap hash table is thread safe for reading from multiple threads. For example, given a hash table A, it is safe to read A from thread 1 and from thread 2 simultaneously.

- If a single hash table is being written to by one thread, then all reads and writes to that hash table on the same or other threads must be protected. For example, given a hash table A, if thread 1 is writing to A, then thread 2 must be prevented from reading from or writing to A.

- It is safe to read and write to one instance of a type even if another thread is reading or writing to a different instance of the same type. For example, given hash tables A and B of the same type, it is safe if A is being written in thread 1 and B is being read in thread 2.

- The *parallel* tables can be made internally thread-safe for concurrent read and write access, by providing a synchronization type (for example [std::mutex](https://en.cppreference.com/w/cpp/thread/mutex)) as the last template argument. Because locking is performed at the *submap* level, a high level of concurrency can still be achieved. Read access can be done safely using `if_contains()`, which passes a reference value to the callback while holding the *submap* lock. Similarly, write access can be done safely using `modify_if`, `try_emplace_l` or `lazy_emplace_l`. However, please be aware that iterators or references returned by standard APIs are not protected by the mutex, so they cannot be used reliably on a hash map which can be changed by another thread.

- Examples on how to use various mutex types, including boost::mutex, boost::shared_mutex and absl::Mutex can be found in `examples/bench.cc`

## Using the Parallel Hashmap from languages other than C++

While C++ is the native language of the Parallel Hashmap, we welcome bindings making it available for other languages. One such implementation has been created for Python and is described below:

- [GetPy - A Simple, Fast, and Small Hash Map for Python](https://github.com/atom-moyer/getpy): GetPy is a thin and robust binding to The Parallel Hashmap (https://github.com/greg7mdp/parallel-hashmap.git) which is the current state of the art for minimal memory overhead and fast runtime speed. The binding layer is supported by PyBind11 (https://github.com/pybind/pybind11.git) which is fast to compile and simple to extend. Serialization is handled by Cereal (https://github.com/USCiLab/cereal.git) which supports streaming binary serialization, a critical feature for the large hash maps this package is designed to support.

## Acknowledgements

Many thanks to the Abseil developers for implementing the swiss table and btree data structures (see [abseil-cpp](https://github.com/abseil/abseil-cpp)) upon which this work is based, and to Google for releasing it as open-source.